Artificial intelligence (AI) has sometimes been sensationalized by the media. Will AI and computers replace healthcare providers? Likely not, but the technology has begun to trickle down into clinical pathways and applications, including radiology. In the past decade, we have seen developments that offer real clinical benefits.

This paper has two parts. Part I explores advances in AI and machine learning (ML) applications in radiology. Part II shares the art of successfully conducting AI radiology trials from an operational perspective, with several case studies.

Part I: The Advances of AI and ML in Radiology

Technology Report: AI and ML

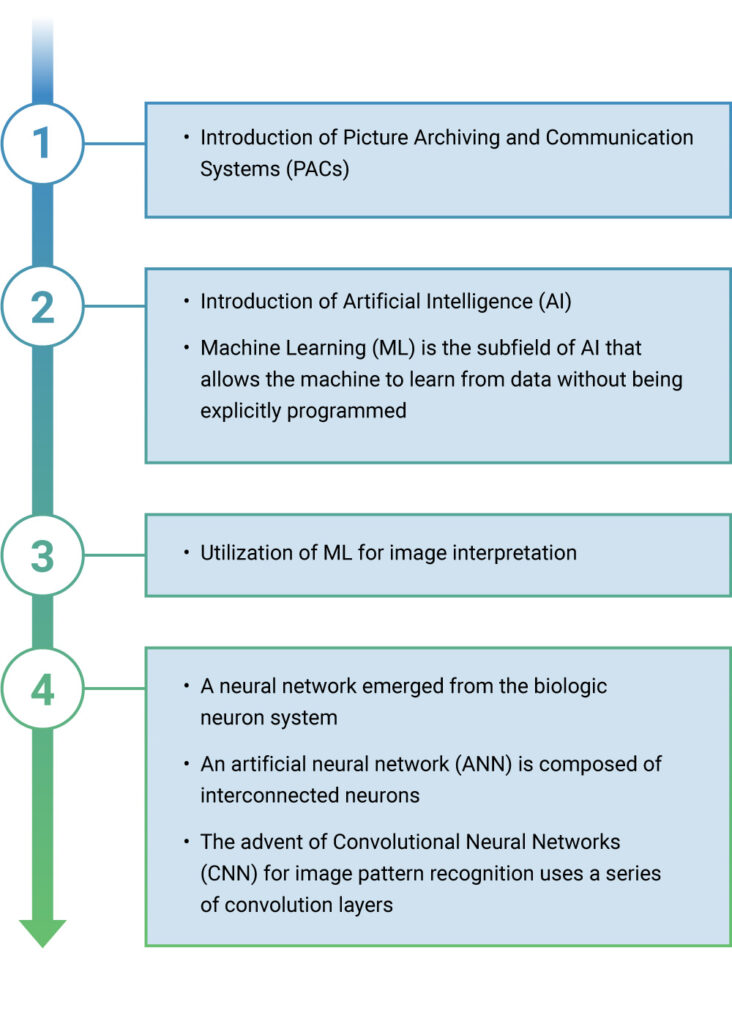

Fifteen years ago, radiologists placed films on a lightboard to read them. With the introduction of picture archiving and communication systems (PACS), this process evolved to using computer systems to move images, interpret results, and generate reports. This advance led to a host of developments around automation and understanding what could be seen in pixels beyond the human eye’s capabilities.

The industry first used ML for image interpretation in mammography. Development then stalled until the emergence of neural networks: algorithms modeled on biological nervous systems that form an artificial network of interconnected computer neurons. Today, the convolutional neural network (CNN) is an extensive, multi-layer neural network that recognizes image patterns.

At WCG, we see two concepts in AI algorithm development that apply to radiology and clinical trials:

Predefined engineered features and traditional machine learning help detect abnormalities in an image. The computer looks for a predefined set of features, such as texture, an intensity histogram, or shape. Experts encode their knowledge into the algorithm so that it can interpret the imagery, select candidate findings, and possibly classify them. A classic use is detecting calcifications or masses on a mammogram, where the computer flags an aberration in pixel density or heterogeneity.
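As a rough illustration of the engineered-feature approach, the Python sketch below computes a few hand-crafted features from a small grayscale patch and applies a fixed rule. The function names, the bin count, and both thresholds are hypothetical values chosen for the example; they are not taken from any clinical product.

```python
from statistics import mean, pstdev

def engineered_features(patch, bins=4, max_value=255):
    """Compute hand-crafted features from a 2D grayscale patch (a list of rows)."""
    pixels = [p for row in patch for p in row]
    # Intensity histogram with equal-width bins over [0, max_value].
    width = (max_value + 1) / bins
    histogram = [0] * bins
    for p in pixels:
        histogram[min(int(p // width), bins - 1)] += 1
    return {
        "mean_intensity": mean(pixels),   # proxy for pixel density
        "heterogeneity": pstdev(pixels),  # spread of pixel values
        "histogram": histogram,
    }

def flag_patch(features, density_threshold=200, heterogeneity_threshold=60):
    """Hypothetical expert rule: flag bright or highly heterogeneous patches."""
    return (features["mean_intensity"] > density_threshold
            or features["heterogeneity"] > heterogeneity_threshold)

# Made-up patches: one uniform, one containing a single bright pixel.
uniform = [[50, 52], [51, 49]]
bright_spot = [[50, 250], [52, 48]]

print(flag_patch(engineered_features(uniform)))      # False
print(flag_patch(engineered_features(bright_spot)))  # True
```

The point of the sketch is that every feature and every threshold is chosen by a person in advance; the algorithm does not discover them.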

Deep learning lets the computer learn the relevant features itself rather than relying on a predefined set. The computer finds an abnormality and uses convolution layers to extract feature maps. For example, an algorithm can determine whether an abnormality on an image meets a threshold representing cancer. This leap in engineering makes these algorithms clinically relevant.
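To make "feature map extraction" concrete, here is a minimal pure-Python sketch of what a single convolution filter does. The kernel is written by hand for the example; in a real CNN the kernel weights are learned from training data, and many such filters are stacked in layers. The toy image and all names are assumptions made for illustration.

```python
def convolve2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the core operation of a convolution layer."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def relu(feature_map):
    """Keep positive responses and zero out the rest."""
    return [[max(0, v) for v in row] for row in feature_map]

# Toy 4x4 image with a vertical edge between columns 1 and 2.
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
# Hand-written kernel that responds to dark-to-bright vertical edges.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = relu(convolve2d(image, kernel))
print(feature_map)  # [[0, 18, 0], [0, 18, 0], [0, 18, 0]]
```

The feature map is large only where the pattern encoded by the kernel appears, which is how later layers can build on simple patterns to recognize more complex ones.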

Clinical and Operational Development

AI and ML efforts in clinical radiology currently focus on three goals:

- Improve the performance of detection and diagnostic algorithms.

- Improve the productivity of image interpretation through AI-assisted workflows to reduce cost.

- Develop radiomics that integrate imaging data with pathology, genomics, and other disciplines to facilitate diagnosis and treatment.

We see development in several areas. As this image illustrates, in image classification, computer vision looks at the image holistically, flags that an abnormality is present, and then stops so the human interpreter can make a determination. In object detection, the computer locates an abnormality in an image, e.g., a calcification in a mammogram, so the radiologist can decide whether it is cancer.

In semantic segmentation, the computer assigns each pixel in an image to a class and so outlines abnormalities specifically. In the image shown, the darker gray items in the liver may or may not represent abnormalities, cysts, or tumors; the computer segments them out. Finally, instance segmentation uses pixel-level detection to delineate individual objects within the same class, determine whether a given set of pixels is a tumor, and then identify it as, for example, hepatocellular carcinoma.
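The difference between semantic and instance segmentation can be sketched in a few lines of Python. In this toy example, a simple intensity threshold stands in for a trained segmentation model (an assumption made purely for illustration), and connected-component labeling separates the resulting mask into individual objects. All names and pixel values are hypothetical.

```python
from collections import deque

def semantic_mask(image, threshold):
    """Semantic step: label every pixel as 1 (candidate lesion) or 0 (background)."""
    return [[1 if p >= threshold else 0 for p in row] for row in image]

def label_instances(mask):
    """Instance step: give each 4-connected region of 1s its own id (1, 2, ...)."""
    h, w = len(mask), len(mask[0])
    labels = [[0] * w for _ in range(h)]
    next_id = 0
    for r in range(h):
        for c in range(w):
            if mask[r][c] == 1 and labels[r][c] == 0:
                next_id += 1
                labels[r][c] = next_id
                queue = deque([(r, c)])
                while queue:  # breadth-first flood fill of one region
                    y, x = queue.popleft()
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                        if (0 <= ny < h and 0 <= nx < w
                                and mask[ny][nx] == 1 and labels[ny][nx] == 0):
                            labels[ny][nx] = next_id
                            queue.append((ny, nx))
    return labels, next_id

# Made-up 3x4 image containing two separate bright regions.
image = [
    [9, 9, 0, 0],
    [9, 0, 0, 7],
    [0, 0, 0, 7],
]
mask = semantic_mask(image, threshold=5)
labels, count = label_instances(mask)
print(mask)    # [[1, 1, 0, 0], [1, 0, 0, 1], [0, 0, 0, 1]]
print(labels)  # [[1, 1, 0, 0], [1, 0, 0, 2], [0, 0, 0, 2]]
print(count)   # 2
```

The semantic mask says only which pixels belong to the "lesion" class; the instance labels additionally say which pixels belong to the same lesion, which is what allows each object to be measured or classified on its own.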

Improving Clinical Efficiency

Where do these algorithms fit into the radiologist’s workflow? Typically, the workflow moves from acquisition to pre-processing, after which images are placed into a reporting queue and interpreted. Algorithms can contribute at each of these general stages.

The latest technology offers new opportunities for detection, characterization, and monitoring. These advances can become part of the clinical workflow to help increase efficiency at the patient and provider levels.

Clinical applications include various specialties:

- Thoracic radiology – lung nodule detection on CTs

- Abdominal radiology – characterization and measurement of hepatocellular carcinoma (HCC) for pre-op planning and treatment

- Colonoscopy – detection and classification of polyps

- Breast radiology – identification and characterization of tumors

- Neuroradiology – brain tumor assessment, growth, and treatment

- Radiation oncology – segmentation of tumors for radiation dose optimization

Future Development in AI

Where do we see these developments leading? Early efforts in AI showed that human performance exceeded that of AI; computer performance was subhuman, with only occasional commercial applications. In the past decade, we’ve seen those curves move closer. The technology is now well suited for narrow, task-specific AI: Can the computer find an abnormality? Can the computer interpret the abnormality? Can the computer give some assessment of treatment?

At some point, the AI curve will overtake the human curve. We see a significant acceleration in computing ability, including hardware and software, and the trend shows that AI should exceed human performance and reasoning for these complex tasks. As an industry, it behooves us to guide its development and understand the limitations so that we can provide the best care for patients.

Part II: The Art of Successfully Conducting AI Radiology Trials

Expertise in AI

Over the past 12 years, WCG’s imaging solution for AI has conducted more than 45 of the largest-ever multi-reader, multi-case (MRMC) AI trials across all therapeutic areas and indications. These trials are large in terms of the volume of reads, involving 10,000-30,000 reviews with expedited timelines. We have achieved multiple FDA clearances on behalf of our clients for AI/CAD/Viewer devices. Our facility is FDA-registered and inspected, and we have ISO 13485 certification for medical device clinical trials.

For these trials, it is vital to source cases that adequately represent the indication. Quality cases need an established “ground truth,” which involves checking the results of ML datasets for accuracy against the real world. We have more than 600 physicians in the practice from different backgrounds and indications: experts who can establish ground truth in the various therapeutic areas.

Standalone vs. MRMC Studies

In the evaluation of devices, there are two types of studies: standalone and MRMC. A standalone study evaluates the performance of the AI software by itself, comparing its output with the results of expert truthers. An MRMC study evaluates readers’ performance with and without the software; it is retrospective, using cases collected from archives.
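A standalone comparison against expert truth can be summarized with metrics such as sensitivity and specificity. The sketch below is an assumption about how such a comparison might be scored; the source does not specify study endpoints, and the ten cases shown are made-up data.

```python
def standalone_performance(ai_calls, ground_truth):
    """Compare per-case AI calls (1 = positive) with expert ground truth."""
    pairs = list(zip(ai_calls, ground_truth))
    tp = sum(1 for a, t in pairs if a and t)          # AI positive, truly positive
    tn = sum(1 for a, t in pairs if not a and not t)  # AI negative, truly negative
    fp = sum(1 for a, t in pairs if a and not t)      # AI positive, truly negative
    fn = sum(1 for a, t in pairs if not a and t)      # AI negative, truly positive
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
    }

# Hypothetical results for 10 cases: 4 pathological, 6 normal.
truth = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
ai    = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]

result = standalone_performance(ai, truth)
print(result["sensitivity"])            # 0.75
print(round(result["specificity"], 3))  # 0.833
```

An MRMC study asks a different question: not how the software scores by itself, but whether readers score better when they use it.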

In a fully crossed, fully randomized MRMC trial, many readers read the same 300-600 cases. From that data come the results on how well the software performs and its ability to benefit the physician. Does the clinical performance of the physician improve using AI software? The purpose of a multi-reader, multi-case study is to account for variation across readers and cases and determine, in an unbiased way, the product’s value.

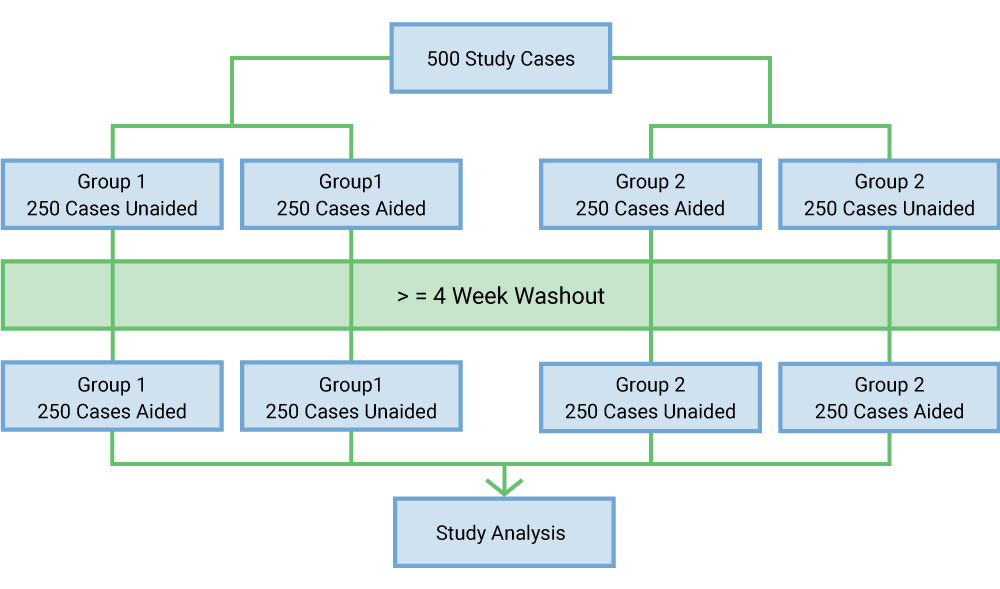

These MRMC studies use a crossover design. A typical design has 20 readers for 500 study cases that are artificially enriched, meaning the set contains a higher proportion of pathological cases than routine practice would. In the first session, half of the readers read without AI assistance and half with AI assistance. A four-week washout period (an industry standard) allows readers to forget the cases. When they return, they reevaluate all 500 cases in the opposite condition: a reader who was unaided in the first session reads aided by AI in the second session.
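The crossover assignment described above can be sketched as follows. This is a simplified illustration, not a validated randomization procedure: the reader IDs, the seed, and the function name are hypothetical, and a real trial would follow the randomization scheme defined in its protocol.

```python
import random

def crossover_schedule(reader_ids, seed=0):
    """Randomly split readers in half; each half starts in a different condition
    and switches to the other condition after the washout period."""
    rng = random.Random(seed)  # fixed seed so the schedule is reproducible
    shuffled = list(reader_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    schedule = {}
    for i, reader in enumerate(shuffled):
        first = "unaided" if i < half else "aided"
        second = "aided" if first == "unaided" else "unaided"
        schedule[reader] = {"session_1": first, "session_2": second}
    return schedule

readers = [f"R{n:02d}" for n in range(1, 21)]  # 20 hypothetical reader IDs
schedule = crossover_schedule(readers)

unaided_first = sum(1 for s in schedule.values() if s["session_1"] == "unaided")
print(unaided_first)  # 10
```

Because every reader reads every case in both conditions, each reader serves as their own control, which is what makes the aided versus unaided comparison fair.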

There is value in creating reader groups. Many clients have diverse readers: physicians, radiologists, or physician assistants. Using groups takes a larger number of readers to achieve statistical power, but this approach demonstrates to the FDA the applicability of the product across different types of users.

Essential Elements for Success

Let’s review the essentials for conducting these trials. First comes the study protocol; having a pre-submission meeting with the FDA to ensure that they agree with your trial design saves effort down the road. Next, have a clear study plan, but be flexible to accommodate changes. The data collection protocol is the guiding document for collecting cases; remember that you need institutional review board (IRB) approval. As WCG is the largest IRB globally, we can bring that service to AI studies. Cases must be representative; when targeting the US market, ensure the scanning takes place in the US and the standard of care matches the intent.

Prepare an image processing manual detailing how images will be processed before they’re reviewed. A strong imaging charter defines how the reviewers will assess these cases and is used to train reviewers to minimize intra- and inter-reader variability. Reviewer selection, qualifications, and training should be well defined in the charter; ensure that your readers match the criteria.

Assessments proceed quickly, with trials completed in about four months, including washout time. That said, managing 10,000-30,000 reads is an art form. Reporting includes numerous data points; plan for many columns of data, depending on the indication.

Case Study 1

This typical trial for mammography involved 24 readers and 300 cases, resulting in 14,400 reads. We established a ground truth for this trial and then conducted the pilot study plus the large pivotal study. Note that there are many trials and opportunities in the mammography market because of the volume of mammograms reviewed.

Case Study 2

In pulmonology, we see many images of the lung and the entire chest involving nodules, pleural effusion, and thorax. This trial involved 16 readers and 320 cases for over 10,000 reads. Note the different numbers of readers and cases in these studies. Why aren’t they the same? The client’s statistician determined the numbers needed to achieve statistical power in the trial. In this case, we sourced all the cases for this client, established ground truth, and then conducted the pivotal trial.

Case Study 3

The third study was designed around intracranial hemorrhages. This trial was smaller, with 20 readers and 200 cases for 8,000 reads. The images were provided to us, speeding the process. We established ground truth and conducted a pilot to verify statistical power and measure how the software performed. Finally, we conducted the pivotal trial.
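The read counts quoted in the three case studies are consistent with each reader reading every case twice, once in each session of a crossover design. That factor of two is our inference from the numbers rather than something stated for each trial, and the function name below is hypothetical.

```python
def total_reads(readers, cases, sessions=2):
    """Reads in a fully crossed design: every reader reads every case in every session."""
    return readers * cases * sessions

# (readers, cases) as reported in the three case studies.
case_studies = {
    "mammography": (24, 300),
    "pulmonology": (16, 320),
    "intracranial hemorrhage": (20, 200),
}

for name, (n_readers, n_cases) in case_studies.items():
    print(f"{name}: {total_reads(n_readers, n_cases):,} reads")
# mammography: 14,400 reads
# pulmonology: 10,240 reads
# intracranial hemorrhage: 8,000 reads
```

The same arithmetic explains why read volume grows quickly: adding a few readers or cases multiplies through the whole design.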

Lessons Learned

One lesson we have learned is to follow a methodical, systematic approach. It takes skill to conduct and manage many readers, cases, and reads in a short time. We followed the ISO path because it brought us discipline.

It is also essential to have clear control of the process, ensuring evidence of the separation between development data and study data. Note that the FDA will ask about traceability whether or not the client sources the cases.
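One simple piece of evidence for that separation is a check that no case appears in both the development set and the study set. The sketch below assumes each case carries a stable identifier; the IDs and the function name are hypothetical, and a real audit trail would involve more than an ID comparison.

```python
def find_leakage(development_case_ids, study_case_ids):
    """Return case IDs present in both sets; an empty list means the sets are disjoint."""
    return sorted(set(development_case_ids) & set(study_case_ids))

# Made-up identifiers with one case deliberately shared between the two sets.
development = ["DEV-001", "DEV-002", "SITE3-117"]
study = ["STUDY-001", "SITE3-117", "STUDY-002"]

overlap = find_leakage(development, study)
print(overlap)  # ['SITE3-117']
```

Running such a check before the pivotal study, and keeping its output with the study records, gives a concrete artifact to point to when traceability questions come up.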

It is essential to have reviewer qualifications, training, and documentation in hand. It is also important to address data export quality and quality assurance to ensure that the volume of data was processed successfully; the FDA will review this. We also learned to ensure that the cases represent the indication and come from multiple scanner manufacturers.

Regarding targeted users, it is helpful to use groupings in these trials, not just radiologists. What is the number one issue that we have seen in trials? Under-powering of the trial: too few readers or too few cases. We have seen many trials redone or extended to achieve statistical power, requiring more time and money.

Conclusion

What is the greatest opportunity for AI in radiology? At WCG, we see the future in radiomics – integrating imaging data with other clinical data. That is a database-level task, and it is difficult for humans to synthesize that much data in a single sitting. Therefore, using algorithms can help synthesize data beyond what an individual can do and present that data in a clinically meaningful manner.
